Introduction: Why Neural Networks Matter in 2025

Neural networks are changing the way machines understand and respond to the world. Loosely modeled on the human brain, they’re a key part of today’s artificial intelligence (AI). Whether it’s helping doctors spot problems faster, powering voice assistants like Siri or Alexa, or guiding self-driving cars, neural networks are behind many of the smart tools we use every day.

In this guide, we’ll explain what neural networks are, how they work, the main types you should know about, and how they’re being used in real life, especially in areas like edge computing, cybersecurity, and autonomous systems.

What Is a Neural Network?

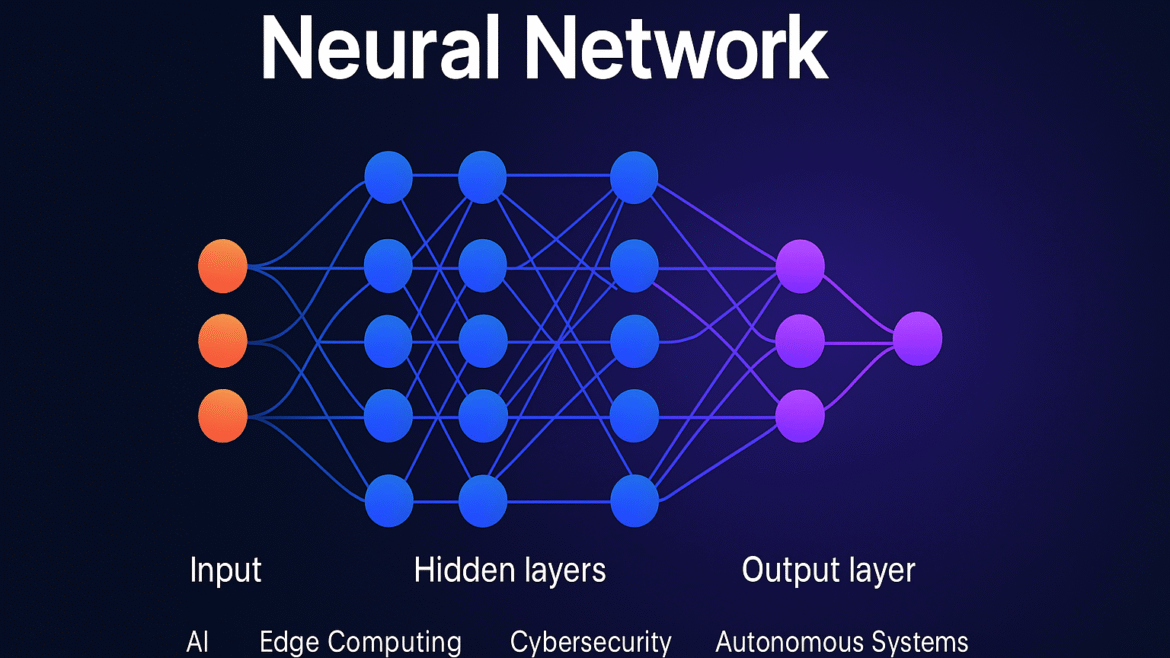

A neural network is a machine learning model designed to recognize patterns in data. It is loosely modeled on the human brain: digital units called neurons (or nodes) are organized in layers and linked by weighted connections, the counterpart of synapses. By learning from examples, the network picks up patterns it can then use to make predictions or classifications. Neural networks are the basis of deep learning, a form of machine learning (ML) found in many contemporary AI systems.

Key Terms Explained:

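Neuron (Node): The basic unit that receives inputs, combines them, and passes on an output.

Weights: Values that scale each input to set how much it contributes to the neuron’s output.

Bias: A constant added to the weighted sum, which shifts the point at which the neuron activates.

Activation Function: A function applied to the weighted sum plus bias that determines how strongly the neuron activates.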
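To make these terms concrete, here is a minimal sketch of a single neuron in plain Python. The sigmoid activation and the input, weight, and bias values are assumptions chosen for illustration; real networks use many neurons and often other activation functions.

```python
import math


def sigmoid(z: float) -> float:
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values only: two inputs, two weights, one bias.
output = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # 0.5, because the weighted sum is exactly 0
```

Changing a weight changes how much its input matters, and changing the bias shifts the output up or down for every input.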

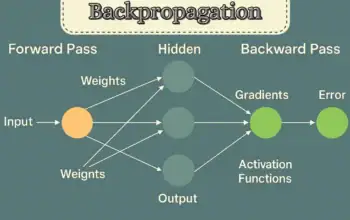

A neural network learns by adjusting its weights and biases during training. This typically relies on backpropagation, a process that works out how much each weight and bias contributed to the error.

How Do Neural Networks Work?

You can think of a neural network as a series of steps that help a computer learn from data:

➥Input Layer: This is where the raw data goes in—like a picture, a sentence, or sensor readings.

➥Hidden Layers: These layers do the “thinking.” They look for patterns or important details in the data.

➥Output Layer: This gives the final answer, like labeling an image, making a prediction, or choosing a category.
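The three layers above can be sketched as a small forward pass in plain Python. This is only an illustration: the layer sizes, the ReLU activation, and every weight and bias value below are made up for the example, whereas a real network learns them during training.

```python
def relu(z):
    """A common hidden-layer activation: passes positives, zeroes out negatives."""
    return max(0.0, z)


def identity(z):
    """No activation, used here for the output layer."""
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden_weights = [[1.0, -1.0], [0.5, 0.5]]
hidden_biases = [0.0, -1.0]
output_weights = [[2.0, 1.0]]
output_biases = [0.5]


def predict(inputs):
    hidden = dense(inputs, hidden_weights, hidden_biases, relu)      # hidden layer
    return dense(hidden, output_weights, output_biases, identity)    # output layer


print(predict([3.0, 1.0]))  # [5.5]
```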

When training the network:

➤The data moves through all the layers from start to finish (this is called forward propagation).

➤The network’s answer is compared to the correct answer.

➤If it’s wrong, the network adjusts itself by sending the error back through the layers (backpropagation) to improve.

This process repeats many times until the network gets good at making accurate predictions.
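The training steps above can be sketched in plain Python. This is a minimal illustration rather than a production method: it trains a single sigmoid neuron on a toy OR dataset, and the dataset, learning rate, and epoch count are assumptions chosen for the example. With one neuron, sending the error back is a single chain-rule step; backpropagation in a multi-layer network repeats that step layer by layer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset (an assumption for illustration): the logical OR of two inputs.
samples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def forward(weights, bias, inputs):
    """Forward propagation for a single sigmoid neuron."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def mean_loss(weights, bias):
    """Cross-entropy between the predictions and the correct answers."""
    total = 0.0
    for inputs, target in samples:
        p = forward(weights, bias, inputs)
        total -= target * math.log(p) + (1.0 - target) * math.log(1.0 - p)
    return total / len(samples)


def train(epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for inputs, target in samples:
            # Forward pass, then compare the answer with the correct one.
            error = forward(weights, bias, inputs) - target
            # Send the error back: gradient of the loss for each parameter.
            for i, x in enumerate(inputs):
                grad_w[i] += error * x / len(samples)
            grad_b += error / len(samples)
        # Adjust weights and bias a small step against the gradient.
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


initial_loss = mean_loss([0.0, 0.0], 0.0)
weights, bias = train()
final_loss = mean_loss(weights, bias)
predictions = [round(forward(weights, bias, inputs)) for inputs, _ in samples]
print(initial_loss, final_loss, predictions)
```

The loss after training is lower than the loss before it, and the rounded predictions match the OR targets.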

Main Types of Neural Networks

Feedforward Neural Networks (FNNs)

The most elementary form of neural network, in which data moves in one direction—from input to output—with no feedback loops. FNNs are generally applied to simple classification and regression problems.

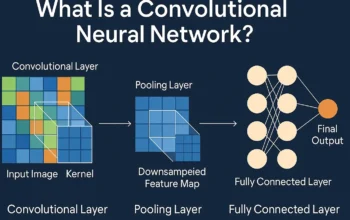

Convolutional Neural Networks (CNNs)

Primarily aimed at image-based problems, CNNs employ convolutional layers to extract spatial features and patterns automatically. They are extensively used in image classification, object recognition, and computer vision.
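As a rough sketch of what a convolutional layer computes, the function below slides a small kernel over an image and records one response per position. The 3x4 image and the 2x2 edge-detecting kernel are made-up values; in a trained CNN the kernel values are learned, and libraries add padding, strides, and many channels.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    This is cross-correlation, which is what deep learning libraries
    usually mean by convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only where the edge sits, which is the kind of spatial feature the paragraph above describes.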

Recurrent Neural Networks (RNNs)

Best suited for sequential or time-dependent data, RNNs include loops that enable information to carry across time steps. Typical uses are natural language processing, speech recognition, and time series analysis.
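The loop that carries information across time steps can be sketched with a single recurrent unit. The scalar weights below are illustrative assumptions; a real RNN uses weight matrices learned from data.

```python
import math


def rnn_forward(sequence, w_input=1.0, w_hidden=0.5, bias=0.0):
    """Run a one-unit recurrent network over a sequence and return every hidden state."""
    hidden = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input and on the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states


# Same values, different order: the final state differs because the
# network remembers when the 1.0 arrived.
early = rnn_forward([1.0, 0.0, 0.0])
late = rnn_forward([0.0, 0.0, 1.0])
print(early[-1], late[-1])
```

In this sketch the signal from the first step has already faded noticeably by the third step, which hints at why plain RNNs struggle with long-range dependencies.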

Long Short-Term Memory Networks (LSTMs)

A variation of RNNs, LSTMs use gates to control what is kept in memory, which lets them learn long-term dependencies and mitigates the vanishing gradient problem. They are particularly suited for applications such as machine translation, sequential prediction, and voice recognition.
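To show how an LSTM holds on to information, here is a sketch of one scalar LSTM step using the standard gate equations. The `params` layout and all numeric values are hypothetical, picked so that the forget gate stays open and the input gate stays nearly closed.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One scalar LSTM step. params maps a gate name to (w_input, w_hidden, bias)."""

    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    forget = gate("forget", sigmoid)         # how much old memory to keep
    write = gate("input", sigmoid)           # how much new content to store
    read = gate("output", sigmoid)           # how much memory to expose
    candidate = gate("candidate", math.tanh) # proposed new content

    c = forget * c_prev + write * candidate  # updated cell state (the memory)
    h = read * math.tanh(c)                  # updated hidden state (the output)
    return h, c


# Illustrative parameters: keep almost everything, write almost nothing.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 1.0, 0.0),
}

h, c = 0.0, 1.0  # start with a value of 1.0 stored in memory
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)
print(c)  # still close to 1.0 after 50 steps
```

Because the cell state is updated additively and scaled by a gate near 1, the stored value survives many steps, unlike the repeatedly squashed state of a plain RNN.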

Generative Adversarial Networks (GANs)

Made up of two competing networks, a generator that produces samples and a discriminator that tries to tell them apart from real data, GANs are used to create realistic synthetic data. They are well suited to producing images, video, and art, as well as data augmentation.
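The tug-of-war between the two networks shows up in their loss functions. The sketch below omits the networks themselves and only computes the losses from the discriminator's scores, where a score is its estimated probability that a sample is real. It assumes the commonly used non-saturating generator loss; the scores passed in are made-up numbers.

```python
import math


def discriminator_loss(score_real, score_fake):
    """Low when real data scores near 1 and generated data scores near 0."""
    return -(math.log(score_real) + math.log(1.0 - score_fake))


def generator_loss(score_fake):
    """Non-saturating form: low when generated data scores near 1."""
    return -math.log(score_fake)


# Case 1: the discriminator easily spots the fakes.
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))

# Case 2: the generator fools the discriminator half of the time.
print(discriminator_loss(0.5, 0.5), generator_loss(0.5))
```

Moving from the first case to the second, the discriminator's loss rises while the generator's loss falls, which is the adversarial relationship in miniature.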

Radial Basis Function Networks (RBFNs)

These networks use radial basis functions as activation units and are commonly applied to function approximation and pattern classification. RBFNs are particularly useful in systems that need fast training and quick responses.
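A radial basis function responds most strongly when the input is close to a stored center. The sketch below assumes a Gaussian basis function, a common choice; the centers, width, and output weights are illustrative values rather than trained ones.

```python
import math


def gaussian_rbf(x, center, width):
    """Equals 1.0 at the center and decays toward 0 as x moves away from it."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-squared_distance / (2.0 * width ** 2))


def rbf_network(x, centers, width, output_weights, output_bias=0.0):
    """Hidden layer of RBF units followed by a weighted sum."""
    hidden = [gaussian_rbf(x, center, width) for center in centers]
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


# Hypothetical setup: one center per class, opposite output weights.
centers = [[0.0, 0.0], [1.0, 1.0]]
output_weights = [1.0, -1.0]

near_first = rbf_network([0.0, 0.0], centers, 1.0, output_weights)
near_second = rbf_network([1.0, 1.0], centers, 1.0, output_weights)
print(near_first, near_second)  # positive near the first center, negative near the second
```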

Selection Guidelines

| Data Type | Recommended Architecture |
| --- | --- |
| Structured/tabular | FNN |
| Image/grid-based | CNN |
| Sequential/temporal | LSTM |
| Generative tasks | GANs |
| Pattern recognition | RBFNs |

These guidelines help match an architecture to the problem at hand, balancing performance against implementation complexity. For long sequences where LSTMs become slow to train, transformer architectures process all positions in parallel.

Real-World Applications of Neural Networks

➤Edge AI and Neural Networks

With the rise of edge computing, neural networks can now run on devices like smartphones, drones, and industrial machines.

Examples:

➩Predictive maintenance in factories

➩Real-time object detection in autonomous vehicles

➩Personalized recommendations on mobile devices

➤Healthcare

Neural networks are transforming diagnostics and treatment planning:

➪Detecting tumors in radiology scans

➪Analyzing patient data for predictive analytics

➤Cybersecurity

Neural networks help identify and respond to threats rapidly:

➪Intrusion detection systems

➪Malware classification

➤Finance

Automated trading and fraud detection rely heavily on neural networks:

➪Forecasting market trends

➪Flagging suspicious transactions

➤Natural Language Processing (NLP)

Neural networks enable machines to understand and generate human language:

➪Chatbots

➪Language translation tools

➪Text summarization

Advantages of Neural Networks

➧Adaptability: Neural networks can be retrained or fine-tuned as new data arrives, making them well-suited for dynamic environments.

➧High Accuracy: They perform exceptionally well on complex, high-dimensional problems where traditional models may struggle.

➧Automation: Neural networks automatically extract relevant features from raw data, significantly reducing the need for manual feature engineering.

Challenges and Limitations

| Challenge | Description |
| --- | --- |
| Training Time | Deep neural networks often require large datasets and extended training periods. |
| Computational Cost | High-performance hardware (e.g., GPUs or TPUs) is needed for efficient processing. |
| Explainability | Neural networks are often seen as “black boxes,” making it difficult to interpret their decisions. |
| Overfitting | Models may learn noise or specific patterns in training data, reducing their ability to generalize to new data. |

People Also Ask

Q- What is the difference between AI and neural networks?

AI is the broad field of building machines that perform tasks requiring intelligence. Neural networks are one family of AI models, loosely inspired by the human brain.

Q- Can neural networks work on small devices?

Yes, with Edge AI, optimized neural networks can run on smartphones, sensors, and embedded systems.

Q- Are neural networks better than traditional algorithms?

For complex, unstructured data (like images or audio), neural networks generally outperform traditional algorithms.

Q- How long does it take to train a neural network?

It depends on the dataset, architecture, and hardware. Some networks train in minutes; others take days.

Q- What programming languages are used to build neural networks?

Python is the most common choice, with libraries like TensorFlow, PyTorch, and Keras. R and JavaScript are also used, for example through TensorFlow.js in the browser.

Expert Insights

Neural networks are now the foundation of contemporary smart systems, from edge computing and self-driving cars to cybersecurity and medicine. Their flexibility and accuracy make them well suited to complex, high-dimensional problems.

As hardware improves and training methods become more efficient, neural networks will drive more of this innovation and make artificial intelligence more capable across industries.

Looking Ahead

“Over the next five years, edge-deployed neural networks will be as common as mobile apps. We are moving into an age where artificial intelligence is infused in our lives naturally, enabling devices to make smart decisions locally and in real-time.” —

This vision highlights a world where AI is no longer just a means to an end but is woven into the fabric of our existence, constantly learning and evolving to serve our purposes.