INTRODUCTION

Edge virtualization has become a foundational enabler of modern edge computing, allowing businesses to deliver high-performance, scalable, and efficient digital infrastructure at remote locations. As the volume of data generated at the network edge continues to grow, driven by IoT, AI, and 5G applications, edge virtualization provides the layer of abstraction and management needed to run compute capacity close to data sources. This article explores edge virtualization in depth, drawing on industry experience and real-world implementations from leading sources.

What Is Edge Virtualization?

Simple Definition in Plain Language

Edge virtualization refers to the deployment of virtualized computing environments, such as virtual machines (VMs), containers, or virtual network functions (VNFs), on edge hardware at or near the source of the data. This enables organizations to host multiple applications or services on a single edge device, improving resource utilization and reducing the need for centralized processing.

How It Works at the Edge

At its core, edge virtualization relies on hypervisors or container orchestrators to run isolated workloads locally on edge devices. These virtual environments operate independently of one another but share the same underlying physical resources. By moving computing capacity closer to where data is generated, businesses can process data in real time, enable localized decision-making, and minimize dependence on wide-area networks (WANs) or public cloud services.
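
The idea of isolated workloads sharing one device can be sketched as a simple admission check. This is a minimal illustration, not how any particular hypervisor or orchestrator is implemented; the `EdgeNode` and `Workload` classes and all capacity figures are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Workload:
    name: str
    cpu_cores: int   # CPU cores reserved for this workload (hypothetical figure)
    memory_mb: int   # memory reserved for this workload (hypothetical figure)


@dataclass
class EdgeNode:
    cpu_cores: int
    memory_mb: int
    workloads: list = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_cores - sum(w.cpu_cores for w in self.workloads)

    def free_memory(self) -> int:
        return self.memory_mb - sum(w.memory_mb for w in self.workloads)

    def admit(self, workload: Workload) -> bool:
        """Accept the workload only if its reservation fits in what is left."""
        fits = (workload.cpu_cores <= self.free_cpu()
                and workload.memory_mb <= self.free_memory())
        if fits:
            self.workloads.append(workload)
        return fits


node = EdgeNode(cpu_cores=4, memory_mb=8192)
results = [
    node.admit(Workload("video-analytics", 2, 4096)),
    node.admit(Workload("protocol-gateway", 1, 1024)),
    node.admit(Workload("batch-retraining", 2, 4096)),  # needs more CPU than is left
]
print(results)  # [True, True, False]
```

Each admitted workload keeps its own reservation, while the third request is rejected because the shared hardware has only one core left.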

Difference from Traditional Virtualization

Conventional virtualization generally runs in centralized data centers with reliable power, cooling, and network connections. Edge virtualization, on the other hand, runs in decentralized, resource-constrained, and sometimes harsh environments. The two differ in location, latency tolerance, infrastructure constraints, and the need for real-time responsiveness. Edge solutions have to be rugged, compact, and able to run unattended.

Why Edge Virtualization Is Essential for Edge Computing

Enables Efficient Resource Allocation

Edge virtualization enables efficient hardware utilization by allowing multiple services to be deployed on the same physical infrastructure. This eliminates the need to dedicate a separate device to each function, which saves costs and improves operational efficiency. That is especially useful in space-constrained industrial or remote environments.
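
To make the consolidation argument concrete, the sketch below compares one device per service with packing the same services onto shared devices. It uses first-fit decreasing, a standard bin-packing heuristic chosen here only for illustration; the core counts are made-up numbers and real schedulers also weigh memory, I/O, and isolation needs.

```python
def devices_needed(cpu_demands, cores_per_device):
    """First-fit decreasing: place each workload on the first device with room."""
    free = []  # remaining cores on each device opened so far
    for demand in sorted(cpu_demands, reverse=True):
        if demand > cores_per_device:
            raise ValueError("workload does not fit on any single device")
        for i, remaining in enumerate(free):
            if demand <= remaining:
                free[i] = remaining - demand
                break
        else:
            # No existing device had room, so open a new one.
            free.append(cores_per_device - demand)
    return len(free)


demands = [2, 1, 1, 3, 1]  # hypothetical CPU cores required by five services
dedicated = len(demands)   # one physical device per service
consolidated = devices_needed(demands, cores_per_device=4)
print(dedicated, consolidated)  # 5 2
```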

Reduces Latency and Bandwidth Usage

By processing data at the edge, edge virtualization substantially reduces latency. It avoids the round trip of sending data to centralized cloud data centers and waiting for the response. This is crucial for low-latency applications like industrial automation, autonomous systems, and smart surveillance.
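
A back-of-the-envelope calculation shows why the round trip matters. All figures below are illustrative assumptions rather than measurements: an 80 ms WAN round trip to the cloud, a 2 ms hop to a local edge node, and slower processing on the edge device.

```python
def response_time_ms(network_rtt_ms, processing_ms):
    """Total time from sending a request to receiving the result."""
    return network_rtt_ms + processing_ms


budget_ms = 50  # hypothetical deadline for a control-loop decision

cloud = response_time_ms(network_rtt_ms=80, processing_ms=10)
edge = response_time_ms(network_rtt_ms=2, processing_ms=25)

print(cloud, cloud <= budget_ms)  # 90 False
print(edge, edge <= budget_ms)    # 27 True
```

Under these assumptions the edge node meets the deadline even though its processing step is slower, because the network round trip dominates the total.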

Supports Scalability and Dynamic Workloads

Edge virtualization offers a platform on which applications can be scaled without hardware upgrades. Workloads can be deployed, updated, and controlled over the network, so they can adapt quickly to changing business needs. Low-overhead container-based virtualization, using lightweight Kubernetes distributions such as K3s, supports fast provisioning and orchestration at the edge.

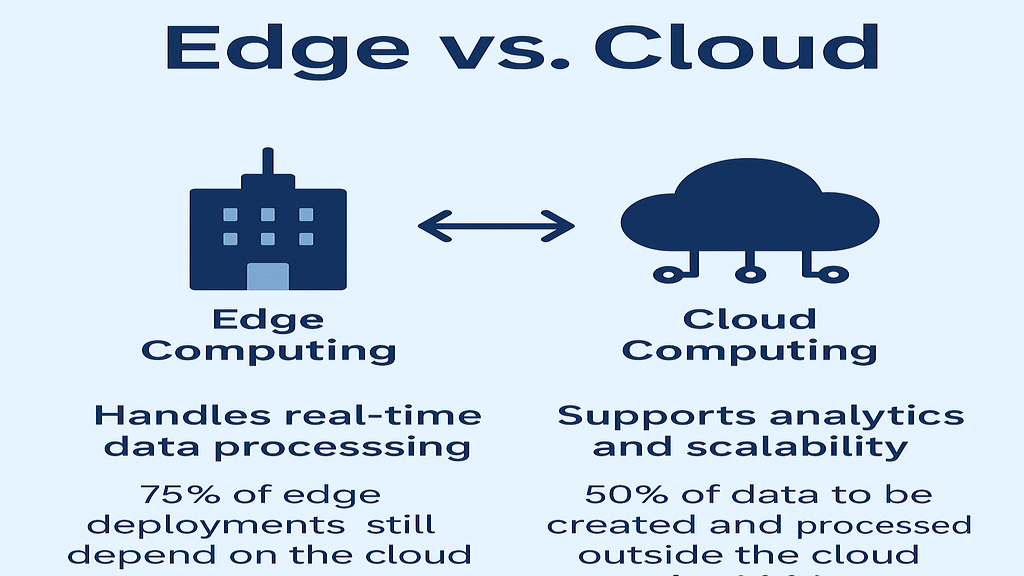

Edge Virtualization vs. Cloud and Core Virtualization

Deployment Location and Latency Differences

Cloud virtualization takes place in large, centralized data centers, which are usually far from end users. Core virtualization typically resides in enterprise data centers. Edge virtualization, on the other hand, happens on-site or near the data source. This keeps latency low, which is essential for applications that need immediate feedback.

Performance and Security Considerations

While cloud infrastructures provide widespread redundancy and centralized security controls, edge infrastructures are decentralized by nature and need localized security controls. Solutions need to account for limited processing resources, intermittent connectivity, and physical security risks. Purpose-built hardware such as Lanner’s edge appliances includes TPMs and hardware encryption to support secure virtualization.

When to Use Edge, Cloud, or Hybrid Models

Each model has a role to play. Edge virtualization is ideal for mission-critical, high-bandwidth, low-latency tasks. Cloud virtualization is well suited to batch processing, long-term storage, and big data analysis. A hybrid model combines both, supporting real-time processing at the edge and in-depth analysis in the cloud, and so balances performance, cost-effectiveness, and resilience.
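
The rule of thumb above can be written as a small decision function. This is a deliberately simplified sketch: the `choose_tier` name, its parameters, and the default 80 ms WAN round trip are assumptions for illustration, and a real placement decision would also consider cost, data volume, and compliance.

```python
def choose_tier(max_latency_ms, needs_long_term_analytics, wan_rtt_ms=80):
    """Pick a deployment model from a latency budget and analytics needs."""
    # If the deadline is shorter than a WAN round trip, the cloud alone cannot meet it.
    real_time = max_latency_ms < wan_rtt_ms
    if real_time and needs_long_term_analytics:
        return "hybrid"
    if real_time:
        return "edge"
    return "cloud"


print(choose_tier(20, needs_long_term_analytics=False))   # edge
print(choose_tier(20, needs_long_term_analytics=True))    # hybrid
print(choose_tier(5000, needs_long_term_analytics=True))  # cloud
```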

Use Cases of Edge Virtualization

Smart Manufacturing and Predictive Maintenance

Edge virtualization makes it possible to deploy condition monitoring and analytics software directly on the shop floor. Such software identifies equipment irregularities, anticipates breakdowns, and schedules maintenance in advance, reducing unexpected downtime and optimizing asset utilization.
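
As one example of the kind of analytics that can run on the shop floor, the sketch below flags sensor readings that deviate sharply from the recent rolling average. It is a generic rolling z-score check, not a description of any specific product; the window size, threshold, and vibration values are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return (index, value) pairs for readings far from the recent rolling mean."""
    recent = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            # Flag values more than `threshold` standard deviations from the mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append((i, value))
        recent.append(value)
    return anomalies


vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 0.9, 5.0, 1.0, 1.1]  # made-up sensor data
print(detect_anomalies(vibration))  # [(7, 5.0)]
```

Note that the flagged value stays in the window for the next few readings and inflates the spread, so a production system would likely exclude or down-weight it.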

Retail Analytics and Cashless Stores

Retailers use virtualized AI applications at the edge to analyze customer behavior, track inventory, and enable autonomous checkout. Because processing happens locally, stores get real-time performance and uninterrupted service even when the cloud connection is down. This improves customer satisfaction and operational efficiency.
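
One common way to keep a store running through a cloud outage is a store-and-forward buffer: events are recorded locally and uploaded once the link returns. The sketch below assumes a hypothetical `send` callable that raises `ConnectionError` when the uplink is unavailable; the class name and event fields are illustrative only.

```python
from collections import deque


class StoreAndForward:
    def __init__(self, send, max_buffered=1000):
        self._send = send  # hypothetical uploader; raises ConnectionError when offline
        self._buffer = deque(maxlen=max_buffered)  # oldest events are dropped when full

    def record(self, event):
        """Keep the event locally, then try to upload anything pending."""
        self._buffer.append(event)
        self.flush()

    def flush(self):
        while self._buffer:
            try:
                self._send(self._buffer[0])
            except ConnectionError:
                return False  # uplink still down; keep events for later
            self._buffer.popleft()
        return True

    def pending(self):
        return len(self._buffer)


link = {"online": False}
uploaded = []


def fake_uplink(event):
    if not link["online"]:
        raise ConnectionError("cloud unreachable")
    uploaded.append(event)


queue = StoreAndForward(fake_uplink)
queue.record({"sku": "A1", "qty": 1})
queue.record({"sku": "B2", "qty": 2})
pending_while_offline = queue.pending()  # 2: nothing could be uploaded yet

link["online"] = True
queue.flush()
print(pending_while_offline, queue.pending(), len(uploaded))  # 2 0 2
```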

Healthcare Remote Monitoring

Clinics and hospitals use virtualized edge nodes to track patient vitals via connected health devices. Processing data in real time enables instant alerts and interventions, reduces dependence on centralized systems, and helps ensure continuity of care, particularly in remote or underserved areas.

Smart Cities and Connected Vehicles

In smart cities, edge virtualization enables intelligent transportation systems, energy management, and public safety through local processing of traffic information, video streams, and IoT sensor data. In connected vehicles, it allows vital systems such as navigation, diagnostics, and infotainment to run as virtual machines on the vehicle’s onboard computer.

How Edge Virtualization Supports 5G, AI, and IoT

Network Slicing and MEC Integration

Edge virtualization is central to 5G architecture, notably in supporting network slicing. Network slices are virtualized, independent logical networks that utilize the same physical infrastructure. Operators deploy VNFs at the edge with Multi-access Edge Computing (MEC) to offer ultra-low-latency services directly from cellular base stations.
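
The notion of independent logical networks on shared infrastructure can be illustrated with a very small capacity model. This is a conceptual sketch only: the `allocate_slices` function, the slice names, and the bandwidth figures are hypothetical, and real 5G schedulers are far more sophisticated (for example, they can lend idle capacity to busy slices).

```python
def allocate_slices(link_mbps, guarantees_mbps, demands_mbps):
    """Give each slice its demand, capped at its guaranteed share of the link."""
    if sum(guarantees_mbps.values()) > link_mbps:
        raise ValueError("guarantees exceed physical link capacity")
    return {
        name: min(demands_mbps.get(name, 0), guarantee)
        for name, guarantee in guarantees_mbps.items()
    }


guarantees = {"low-latency": 200, "broadband": 600, "iot": 100}  # made-up shares
demands = {"low-latency": 50, "broadband": 900, "iot": 100}      # made-up traffic

allocation = allocate_slices(1000, guarantees, demands)
print(allocation)  # {'low-latency': 50, 'broadband': 600, 'iot': 100}
```

The broadband slice is held to its own share, so its traffic surge cannot crowd out the low-latency slice that uses the same physical link.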

Real-Time Data Processing for AI

Virtualized environments run AI inference models at the edge without having to send large datasets to cloud servers. Typical applications include facial recognition, quality inspection, and natural language processing, where latency is critical. AI workloads also benefit from GPU acceleration in virtualized containers or VMs.

Enabling Low-Latency IoT Applications

Edge virtualization supports local processing of IoT data, so decisions can be made instantly at the gateway or device level. This is important in applications such as power grids, logistics centers, and agriculture, where delays would jeopardize safety, productivity, or quality.

Role of SDN and NFV in Edge Virtualization

What Are SDN and NFV?

Software-defined networking (SDN) separates the control logic for the network from physical routers and switches so that it can be controlled centrally. Network functions virtualization (NFV) replaces special-purpose hardware with software-based functions running on commercial off-the-shelf (COTS) servers.
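
The separation of control logic from forwarding hardware can be shown with a toy model. The `Switch` and `Controller` classes below are hypothetical and do not correspond to OpenFlow or any real controller API; the lookup is an exact match on the destination string rather than a true longest-prefix match.

```python
class Switch:
    """Data plane: forwards by table lookup only and holds no routing logic."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination label -> output port

    def forward(self, destination):
        return self.flow_table.get(destination, "drop")


class Controller:
    """Control plane: decides the rules centrally and pushes them to switches."""

    def __init__(self):
        self.switches = {}

    def register(self, switch):
        self.switches[switch.name] = switch

    def install_rule(self, switch_name, destination, out_port):
        self.switches[switch_name].flow_table[destination] = out_port


controller = Controller()
edge_switch = Switch("edge-sw1")
controller.register(edge_switch)

controller.install_rule("edge-sw1", "10.0.1.0/24", "port-2")
before = edge_switch.forward("10.0.2.0/24")  # no rule yet, so the switch drops it

controller.install_rule("edge-sw1", "10.0.2.0/24", "port-3")
after = edge_switch.forward("10.0.2.0/24")   # behavior changed purely in software

print(before, after)  # drop port-3
```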

How They Facilitate Flexible Edge Infrastructure

Together, NFV and SDN enable edge networks to be more agile. Operators are able to deploy, configure, and scale network services such as routing, firewalling, and load balancing on demand with software rather than hardware. This significantly lowers deployment time and expense.

Use of VNFs at the Edge

Virtual network functions such as gateways, firewalls, and deep packet inspection engines are deployed on virtual machines or containers at the edge. Such VNFs improve network performance, security, and service availability while delivering telecom-grade reliability in decentralized deployments.
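
Because VNFs are software, they can be composed into a chain that each packet passes through in order. The sketch below models that idea with plain functions; the `firewall`, `dpi_tagger`, and `run_chain` names, the blocked port, and the packet fields are all hypothetical simplifications.

```python
def firewall(packet):
    """Drop traffic to blocked ports; returning None signals a drop."""
    blocked_ports = {23}  # hypothetical policy: block Telnet
    return None if packet["dst_port"] in blocked_ports else packet


def dpi_tagger(packet):
    """Very rough payload inspection: label the packet with a guessed application."""
    tagged = dict(packet)
    tagged["app"] = "http" if packet["payload"].startswith("GET ") else "unknown"
    return tagged


def run_chain(packet, chain):
    """Pass the packet through each function in order, stopping if it is dropped."""
    for vnf in chain:
        packet = vnf(packet)
        if packet is None:
            return None
    return packet


chain = [firewall, dpi_tagger]
allowed = run_chain({"dst_port": 80, "payload": "GET /index.html"}, chain)
blocked = run_chain({"dst_port": 23, "payload": "login"}, chain)

print(allowed["app"], blocked)  # http None
```

Reordering or extending the chain is a change to a list rather than a change to physical wiring, which is the flexibility NFV aims for.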

Challenges and Future Trends in Edge Virtualization

Security and Data Protection at the Edge

Securing the edge environment is challenging because devices are physically accessible and networks are heterogeneous. Solutions need to include secure boot, TPM chips, a hardware root of trust, and real-time monitoring. Regulatory compliance adds further complexity, particularly in healthcare and finance.

Containerization and Kubernetes on the Edge

Edge nodes usually have limited resources, so they need lightweight containerization platforms. Solutions such as K3s and MicroK8s provide Kubernetes functionality with little resource overhead. They make automated orchestration, fault tolerance, and continuous deployment feasible at the edge.

Future Industry and Standards Adoption

Standards such as ETSI MEC and Open Platform for NFV (OPNFV) are driving interoperability and vendor agnosticism. Industry uptake is accelerating as companies demand standardized deployment frameworks and ecosystems that span cloud to edge. Open-source efforts are also growing, offering cost-competitive, modular alternatives to proprietary platforms.

Glossary: Key Edge Virtualization Terms You Should Know

➤ Edge Node: A physical or virtual compute appliance close to the data source, used for processing and responding to data in real time.

➤ Virtual Machine (VM): A software emulation of a physical computer that runs its own operating system and applications.

➤ Hypervisor: A virtualization layer that allows several VMs to run on one physical server, controlling resource allocation and isolation.

➤ Containerization: A lightweight form of virtualization that packages applications and their dependencies in isolated environments, usually orchestrated by Kubernetes.

➤ MEC (Multi-access Edge Computing): A framework that brings cloud capabilities to the edge of mobile networks, lowering latency and providing real-time services.