Introduction

With its promise of durability, transparency, and user control, the decentralization model has reshaped industries ranging from social networking to finance. However, the concept breaks down when applied to data centers, which are the foundation of the global digital fabric. Despite the temptation to disperse data processing and storage across countless tiny nodes, real-world economic, practical, and technical factors keep the industry concentrated in hyperscale facilities run by giants like Amazon, Google, and Microsoft. This article examines why data centers still can’t achieve decentralization and how the industry is changing to balance local needs with efficiency.

The Operational and Economic Factors of Centralization

Centralized data centers dominate because of economies of scale: larger operations lower the cost per unit. Hyperscale facilities, which can span millions of square feet, depend on buying servers, cooling systems, and electricity in bulk. For instance, a provider running a campus that houses hundreds of thousands of servers can negotiate lower hardware and electricity rates, something smaller, decentralized setups cannot match.
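The scale argument can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name, the cost figures, and the 20% bulk discount are all hypothetical assumptions, not numbers from any provider.

```python
def cost_per_server(fixed_cost: float, unit_cost: float, servers: int,
                    bulk_discount: float = 0.0) -> float:
    """Average cost per server: fixed site costs spread over every server,
    plus the (possibly discounted) per-server hardware price."""
    if servers <= 0:
        raise ValueError("servers must be positive")
    return fixed_cost / servers + unit_cost * (1.0 - bulk_discount)


# Hypothetical figures, chosen only to show the shape of the comparison.
micro = cost_per_server(fixed_cost=500_000, unit_cost=10_000, servers=50)
hyper = cost_per_server(fixed_cost=500_000_000, unit_cost=10_000,
                        servers=200_000, bulk_discount=0.2)

print(f"micro site: {micro:,.0f} per server")
print(f"hyperscale: {hyper:,.0f} per server")
```

Even with a far larger fixed investment, the hyperscale site in this toy model comes out cheaper per server, because the fixed cost is divided across many more machines and the negotiated discount applies to every unit.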

Energy efficiency is another decisive factor. Large hubs invest in sites close to renewable energy sources and in state-of-the-art cooling technologies, including Google’s AI-based systems, which cut the energy used for cooling by up to 40%. Small data centers, by contrast, lack the funding for green innovations and often depend on less efficient local grids. According to a 2023 analysis, hyperscale data centers are 20–30% more energy-efficient than their smaller equivalents, which points to the environmental cost of decentralization.

Operational expertise is also important. Centralized facilities employ specialized personnel to manage security, maintenance, and disaster recovery around the clock. Decentralization would require duplicating this expertise across hundreds of thousands of nodes, which would pose a serious logistical and financial challenge. To put this into perspective, Amazon Web Services (AWS) maintains uptime by using clusters of data centers known as “availability zones”. Replicating this arrangement with micro-centers would be more expensive and more complex, and would likely lower service quality.

Technical and Security Limitations

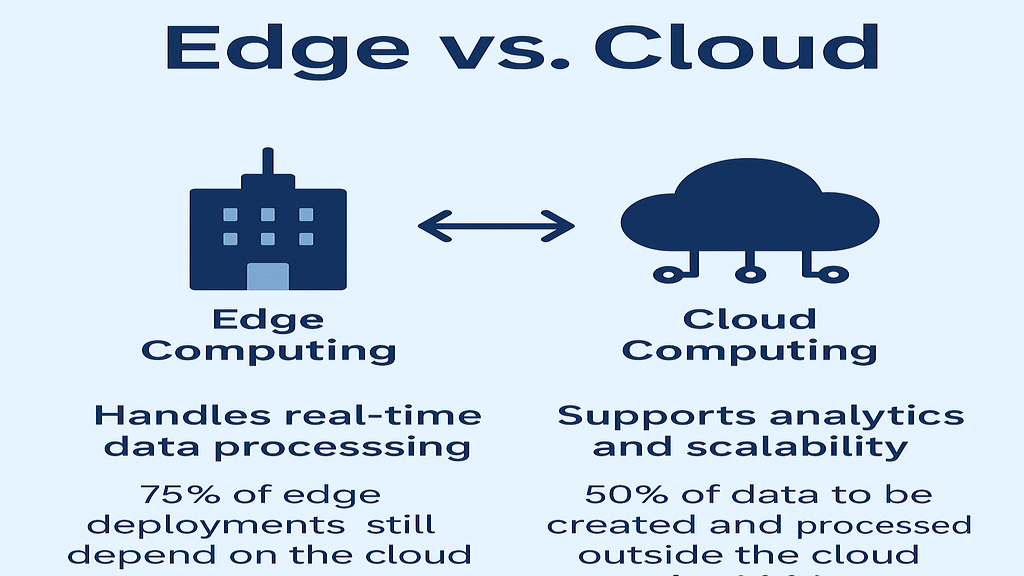

According to their proponents, decentralized data centers would lower latency by putting infrastructure closer to the user. Edge computing (processing data close to where it is created) has gained popularity for applications such as streaming media and Internet of Things devices, but it is a supplement to centralized systems rather than a replacement for them. For example, Netflix maintains centralized hubs for storage, analytics, and updates while using edge servers for local caching. Compute-intensive tasks, like AI training or real-time financial simulations, require the massive power of hyperscale servers.
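The division of labor described above, with edge servers caching locally and a central hub holding everything, can be sketched in a few lines. This is a minimal illustration, not how Netflix or any other provider implements it; the `CentralOrigin` and `EdgeCache` classes, the least-recently-used eviction policy, and the catalog contents are all assumptions made for the example.

```python
from collections import OrderedDict


class CentralOrigin:
    """Stands in for the centralized hub that stores the full catalog."""

    def __init__(self, catalog: dict[str, bytes]) -> None:
        self._catalog = catalog
        self.requests = 0  # how often the edge had to fall back to the hub

    def fetch(self, key: str) -> bytes:
        self.requests += 1
        return self._catalog[key]


class EdgeCache:
    """A small local cache that falls back to the origin on a miss."""

    def __init__(self, origin: CentralOrigin, capacity: int) -> None:
        self._origin = origin
        self._capacity = capacity
        self._items: OrderedDict[str, bytes] = OrderedDict()

    def get(self, key: str) -> bytes:
        if key in self._items:
            # Cache hit: serve locally and mark the item as recently used.
            self._items.move_to_end(key)
            return self._items[key]
        # Cache miss: only the central hub holds the complete catalog.
        value = self._origin.fetch(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used
        return value


origin = CentralOrigin({"a": b"title-a", "b": b"title-b", "c": b"title-c"})
edge = EdgeCache(origin, capacity=2)
for title in ["a", "a", "b", "c", "a"]:
    edge.get(title)

print("requests that reached the central hub:", origin.requests)
```

The edge node absorbs repeat requests, but it can only ever hold a slice of the catalog, so every miss still depends on the central hub being available.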

Security is a major obstacle. Centralized data centers are cyber fortresses with biometric access controls, AI threat detection, and on-site guards. Spreading data across numerous nodes is riskier: rolling out frequent security fixes to hundreds of sites invites errors and delays, and every location is a possible point of entry for a breach. According to IBM’s 2022 Cost of a Data Breach Report, 83% of the organizations studied had experienced more than one breach, a risk that grows with the wider attack surface of dispersed systems.
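A rough back-of-the-envelope model shows why patching many sites is error-prone. It assumes, purely for illustration, that each site is patched on time independently and with the same probability; the function name and the 99.9% figure are hypothetical.

```python
def prob_all_sites_patched(per_site_success: float, sites: int) -> float:
    """Probability that every site is patched on time, assuming each site
    succeeds independently with the same probability."""
    return per_site_success ** sites


# Hypothetical per-site success rate of 99.9%.
for sites in (1, 10, 1_000):
    chance = prob_all_sites_patched(0.999, sites)
    print(f"{sites:>5} sites: {chance:.1%} chance that none is left unpatched")
```

Under these assumptions, a success rate that looks excellent for a single site still leaves a large network more likely than not to have at least one unpatched node.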

Redundancy and dependability complicate decentralization further. Hyperscale hubs use redundant fiber connections, redundant servers, and backup power supplies to ensure uptime, and it is not economical to scale such redundancy to millions of tiny nodes. According to the Uptime Institute, power or cooling failures account for 60% of data center outages, and this risk is greater in smaller facilities with fewer resources. Unless nodes are distributed globally, natural disasters such as earthquakes or floods can also disrupt decentralized networks, which brings us back to the economic constraints of scale.
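The trade-off between redundancy and cost can be sketched with the standard formula for independent redundant components, where the system stays up as long as at least one copy is up. The 99% component availability, the unit cost, and the site counts below are hypothetical, and real failures are rarely fully independent.

```python
def combined_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` independent redundant components, where the
    system is up as long as at least one copy is up."""
    return 1.0 - (1.0 - component_availability) ** copies


def redundancy_cost(sites: int, copies: int, unit_cost: float) -> float:
    """Total spend when every site carries `copies` of a component."""
    return sites * copies * unit_cost


# Hypothetical: a power feed that is available 99% of the time.
single = combined_availability(0.99, copies=1)
double = combined_availability(0.99, copies=2)
print(f"one feed:  {single:.4%} available")
print(f"two feeds: {double:.4%} available")

# The same second feed must be bought once per site (hypothetical unit cost).
print("extra cost, 10 hubs:      ", redundancy_cost(10, 1, 250_000))
print("extra cost, 100,000 nodes:", redundancy_cost(100_000, 1, 250_000))
```

Each extra copy improves availability at a single site, but the bill grows with the number of sites, which is why the same level of redundancy is far more expensive across many small nodes than across a handful of hubs.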

Regulatory and Compliance Complexities

Data privacy laws such as the California Consumer Privacy Act (CCPA) and the European Union’s General Data Protection Regulation (GDPR) place stringent requirements on data storage and transfer. Centralized systems simplify compliance by consolidating audits and limiting the number of jurisdictions in which data resides. Decentralization disperses data among nations with different legal frameworks, and it is unclear who is legally liable when a node in a non-compliant nation mishandles user data. Microsoft estimates that compliance costs five times more for decentralized systems than for centralized ones, because each group of dispersed nodes needs local legal expertise and its own audits.

Constraints on human resources make things more difficult. Few people have the networking, cybersecurity, and hardware support skills needed to run a data center. Decentralization would require either experienced specialists stationed at every site, which is impractical, or remote management tools that introduce latency and security compromises. The latter undermines the very benefit that decentralization is supposed to offer.

The Path Forward: Hybrid Models and Controlled Innovation

Instead of drastic decentralization, the future of data infrastructure will be a hybrid architecture that combines the agility of edge computing with the efficiency of centralization. Microsoft and Meta are at the forefront of this balance. Microsoft’s undersea data center project, Project Natick, aims to pair edge nodes for low-latency applications with centralized scale for power efficiency. Likewise, Meta’s solar-powered data centers show how sustainability and centralization can coexist.

Although they provide a window into a decentralized future, blockchain-based storage networks like Filecoin and Storj remain niche because of their high prices and limited scalability. Storing 1TB on decentralized systems costs 2–3 times more than on AWS, and transaction speeds are significantly slower than on conventional systems. Blockchain-based storage cannot compete with hyperscale players unless that gap is closed.

In this model, centralized hubs manage heavy tasks like AI training and global data analysis, while edge computing handles time-sensitive tasks, such as the decisions made by autonomous cars. Advances in modular data centers and renewable energy could enable smaller, greener facilities, but centralized control must remain the priority.

Conclusion: The Pragmatic Case for Centralization

Data center decentralization is not impossible in principle, but it is impractical given the current state of technology and the economy. The theoretical advantages of resilience and lower latency are outweighed by the costs of fragmentation, which include higher energy consumption, security threats, regulatory confusion, and inflated operational overheads. With the help of edge computing and artificial intelligence, hyperscale centers provide the dependability, efficiency, and security that modern digital ecosystems require.

The story is not about centralization versus decentralization, but about making both approaches as effective as possible. As infrastructure develops, the emphasis will be on wiser centralization: larger, greener, and more responsive facilities, with edge nodes supporting local needs.